If you’ve been in SEO long enough (I’m entering year 17), you know that big shifts usually start quietly — one study here, one algorithm update there — until suddenly the entire industry is forced to evolve.

Well, we just hit one of those moments.

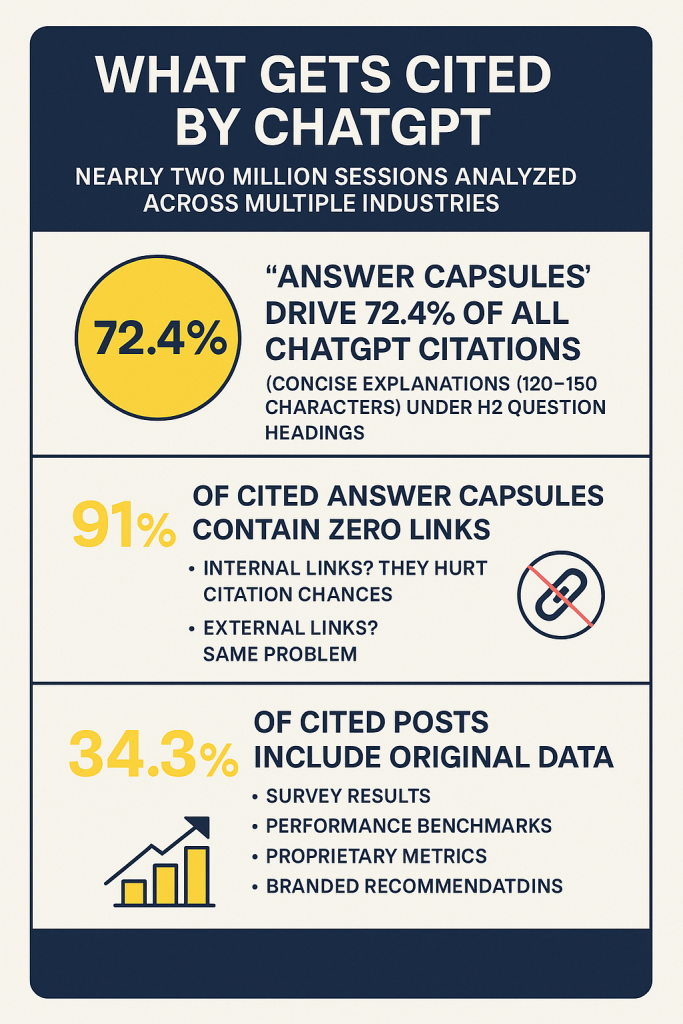

Matt Diggity shared a breakdown of nearly 2 million sessions’ worth of data, analyzed across multiple industries. The goal: figure out which pieces of content large language models (like ChatGPT) choose to cite when generating answers.

The findings? They flip traditional SEO best practices on their head.

Let’s break them down — and talk about how you can put this into play right now.

ChatGPT Wants “Answer Capsules” — Not Long-Winded Explanations

According to the study (published on Search Engine Land), 72.4% of all ChatGPT citations come from content that includes an “answer capsule.”

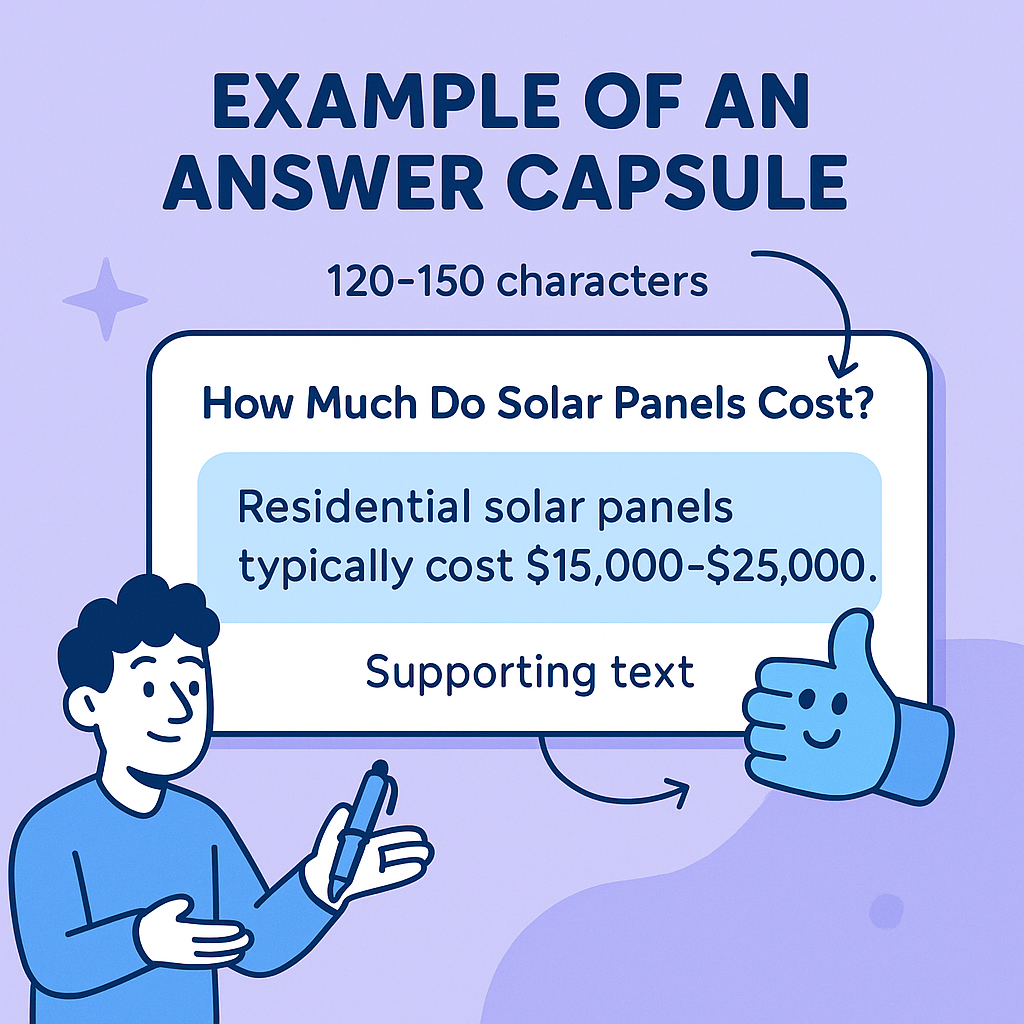

What’s an answer capsule?

Think of it as a mini-snippet you control:

- 120–150 characters

- No fluff

- Directly answers the question

- Placed immediately after an H2-based question

Basically, it’s a self-contained, ultra-clear response — and it acts like a neon sign for LLMs:

“Use this section. This is the answer.”

This is a massive opportunity because traditional SEO often pushes long intros, storytelling, and “value stacking” before giving readers the answer. LLMs have no patience for that. They want the answer first, clean and simple.
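
The length guideline is easy to check mechanically. Here is a minimal Python sketch, assuming the 120–150 character range applies to the visible text after extra whitespace is collapsed. The function name `check_capsule_length` and the `adjust_by` field are hypothetical names for illustration, not something the study defines.

```python
MIN_CHARS = 120  # lower bound from the capsule guideline
MAX_CHARS = 150  # upper bound from the capsule guideline


def check_capsule_length(text: str) -> dict:
    """Report whether a candidate capsule fits the 120-150 character range."""
    # Assumption: count visible characters after collapsing whitespace,
    # so line breaks from a CMS export do not inflate the total.
    normalized = " ".join(text.split())
    length = len(normalized)

    if length < MIN_CHARS:
        adjust_by = MIN_CHARS - length   # positive: characters to add
    elif length > MAX_CHARS:
        adjust_by = MAX_CHARS - length   # negative: characters to trim
    else:
        adjust_by = 0                    # already in range

    return {
        "length": length,
        "in_range": MIN_CHARS <= length <= MAX_CHARS,
        "adjust_by": adjust_by,
    }
```

Run it on each draft capsule before publishing. A check like this only covers length, so "no fluff" and "directly answers the question" still need a human read.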

The Shocking Twist: 91% of Cited Answer Capsules Contained Zero Links

This surprised even me.

In the study, 91% of cited answer capsules contained no internal or external links, which suggests that links inside the capsule sharply reduce the chance of ChatGPT citing that section.

Why?

Because links imply:

- The real answer is elsewhere

- This text is not self-contained

- This information depends on external context

LLMs prefer text that can stand alone. A link breaks that flow.

What to do instead:

- Keep the capsule clean — zero links

- Move links to supporting paragraphs

- Don’t interrupt the capsule with brackets, CTAs, or cross-links

This is a simple fix that can instantly make your content more LLM-friendly.
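
If you have a lot of existing capsules to clean up, the link removal can be roughed out in code. The sketch below assumes your content stores links as markdown links, HTML anchors, or bare URLs. The `strip_links` helper is a hypothetical name, and the patterns are deliberately simple, so treat the output as a first pass to review by hand.

```python
import re

# Assumption: these three patterns cover the link formats in your content.
MD_LINK = re.compile(r"\[([^\]]+)\]\(([^)\s]+)\)")            # [text](url)
HTML_LINK = re.compile(
    r"""<a\b[^>]*\bhref=["']([^"']+)["'][^>]*>(.*?)</a>""",   # <a href="url">text</a>
    re.IGNORECASE | re.DOTALL,
)
BARE_URL = re.compile(r"https?://\S+")                         # https://example.com


def strip_links(capsule: str) -> tuple[str, list[str]]:
    """Return the capsule text without links, plus the URLs that were removed."""
    urls: list[str] = []

    def keep_md_text(match: re.Match) -> str:
        urls.append(match.group(2))
        return match.group(1)          # keep the anchor text, drop the URL

    def keep_html_text(match: re.Match) -> str:
        urls.append(match.group(1))
        return match.group(2)          # keep the anchor text, drop the tag

    def drop_bare_url(match: re.Match) -> str:
        raw = match.group(0)
        url = raw.rstrip(".,;:!?)")    # do not swallow sentence punctuation
        urls.append(url)
        return raw[len(url):]

    text = MD_LINK.sub(keep_md_text, capsule)
    text = HTML_LINK.sub(keep_html_text, text)
    text = BARE_URL.sub(drop_bare_url, text)
    return " ".join(text.split()), urls
```

The returned URL list is what you would move into the supporting paragraphs below the capsule.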

Original Data Supercharges Your Chance of Getting Cited

The study found that 34.3% of cited content included proprietary or original data.

This aligns with what we see in ChatGPT trend analysis. LLMs love:

- First-party data

- Branded metrics

- Survey results

- Industry benchmarks

- Unique analyses

- Insights not found anywhere else

OpenAI and other LLM providers prioritize content that adds something new to the web. If your content merely rephrases what already exists, it becomes less valuable to the model.

Examples of high-value original data:

- “90% of Phoenix homeowners wait too long to replace their AC filter — based on 2,500 service calls compiled by AZ Home Services.”

- “Our agency found that 63% of local service websites improved rankings after removing above-the-fold clutter.”

- “Brainspike Marketing measured a 41% increase in EEAT signals after adding expert quotes into service pages.”

Even one branded statistic can set your content apart.

Why LLMs Gravitate Toward Clean, Capsule-Based Answers

Beyond the study, here’s what we know from LLM architecture and behavior:

1. LLMs extract sections, not pages

They evaluate discrete blocks of text. Cleanly defined capsules are easier to detect and quote.

2. Shorter text blocks increase retrieval precision

Roughly 120–150 characters is the sweet spot for semantic clarity.

3. External links break the internal logic of the text

If the text depends on external sources, the model downgrades it.

4. LLMs look for self-contained value

Answer capsules are like pre-packaged, ready-to-insert responses.

5. Proprietary data increases authority weight

LLMs try to avoid regurgitating generic content, so proprietary data carries more weight.

This is why adding a capsule is almost like adding a schema-powered snippet — it’s a structural cue the model recognizes instantly.

How Brainspike Marketing Is Implementing This

Here’s what I (Matt Hammerton) recommend — and what we’re already implementing for clients:

✔️ Add answer capsules after all question-based H2s

Perfect for FAQ-style blog posts, informational guides, and “how much does X cost?” articles.

✔️ Remove links from the capsule

Move them to supporting content below.

✔️ Add at least one piece of branded or proprietary data per article

Even a single line can boost LLM citation opportunities.

✔️ Re-optimize top-performing evergreen content

Especially pieces already ranking for question-based queries.

✔️ Build capsule-based SEO templates

This is the new “Featured Snippet Optimization” — but designed for AI.
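
To find which existing pages need the first two fixes, a quick audit script can help. The following is a sketch under stated assumptions, not a description of any production tooling: it treats any `<h2>` ending in "?" as a question-based H2, treats the first `<p>` after it as the capsule, and uses only Python's standard `html.parser`. The names `CapsuleAudit` and `audit` are hypothetical.

```python
from html.parser import HTMLParser


class CapsuleAudit(HTMLParser):
    """Collect each H2 and the first paragraph that follows it."""

    def __init__(self):
        super().__init__()
        self.sections = []        # one dict per H2 found on the page
        self._current = None      # tag whose text is being captured: "h2" or "p"
        self._awaiting_p = False  # True between an H2 and its first paragraph

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.sections.append({"heading": "", "capsule": "", "has_link": False})
            self._current = "h2"
            self._awaiting_p = False
        elif tag == "p" and self._awaiting_p:
            self._current = "p"
        elif tag == "a" and self._current == "p":
            self.sections[-1]["has_link"] = True  # link inside the capsule

    def handle_endtag(self, tag):
        if tag == "h2" and self._current == "h2":
            self._current = None
            self._awaiting_p = True
        elif tag == "p" and self._current == "p":
            self._current = None
            self._awaiting_p = False  # only the first paragraph counts

    def handle_data(self, data):
        if self._current == "h2":
            self.sections[-1]["heading"] += data
        elif self._current == "p":
            self.sections[-1]["capsule"] += data


def audit(html: str, min_chars: int = 120, max_chars: int = 150) -> list[dict]:
    """Return question-style H2 sections whose first paragraph breaks a capsule rule."""
    parser = CapsuleAudit()
    parser.feed(html)
    parser.close()

    problems = []
    for section in parser.sections:
        heading = " ".join(section["heading"].split())
        capsule = " ".join(section["capsule"].split())
        # Assumption: a question-based H2 is any H2 whose text ends with "?".
        if not heading.endswith("?"):
            continue
        issues = []
        if not min_chars <= len(capsule) <= max_chars:
            issues.append(f"length {len(capsule)} outside {min_chars}-{max_chars}")
        if section["has_link"]:
            issues.append("contains a link")
        if issues:
            problems.append({"heading": heading, "issues": issues})
    return problems
```

Feed it the rendered HTML of a page and it lists the question headings that are missing a clean capsule. It does not check whether the paragraph sits immediately after the H2 or whether it actually answers the question, so use it to build a to-do list rather than as a pass/fail gate.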

Example of a High-Quality Answer Capsule

H2: How Much Does Furnace Repair Cost in Gilbert, AZ?

Answer Capsule (120–150 characters):

“Most furnace repairs in Gilbert, AZ cost $150–$550, depending on the part, the age of the system, and the urgency of the call.”

Real-World Case Studies Supporting This Trend

📌 Case Study 1: Healthline’s Snippet Dominance

Healthline uses clean, link-free mini-summaries under H2s. LLMs cite them constantly because they’re structured like answer capsules (even before we called them that).

📌 Case Study 2: PwC and Deloitte Research Pages

Pages heavy on original data nearly always show up in AI outputs. Their proprietary statistics act as authority signals to LLMs.

📌 Case Study 3: Local Home Services Blogs

Simple, clean Q&A sections outperform longer SEO-heavy content when fed into LLM retrieval systems. Capsule content gives models exactly what they need to reuse the text verbatim.

What This Means for SEO in 2025 and Beyond

Search isn’t dying — but the way content gets referenced is changing fast.

Your content now needs to:

- Help search engines

- Help humans

- Help AI generate answers

If you’re not optimizing for LLM citation, you’re leaving visibility on the table.

The brands that win the next wave of SEO will be the ones whose content gets quoted the most — not just ranked the highest.

This shift is already happening. The data makes it clear.

If You Want This Implemented on Your Site…

This is the new frontier of SEO, and if you want your business ahead of the curve, Brainspike Marketing can build this into your content strategy right now:

- Capsule-based content optimization

- Proprietary data development

- EEAT and LLM-ready formatting

- AI-aligned content structures

- High-authority industry insights

Just reach out — I’ll personally walk you through how to integrate answer capsules across your site and how to leverage this new research to win more visibility in both search and AI platforms.